Heads-up (pun intended)

This is not my typical code-with-sample story - this is a war story from the front line of Windows Mixed Reality development. How did I get here, what did I learn, what mistakes did I make, what scars do I have to show, and how did I win in the end?

The end

On the evening (CET) of Tuesday, October 10, 2017, Kevin Gallo - VP of Windows Developer Platform - announced in London the release of the SDK for the Windows 10 Fall Creators Update and the opening of the Windows Store for apps targeting that release - including Mixed Reality apps.

Mere hours after that - Thursday had just arrived in the Netherlands - an updated version of Walk the World with added support for Windows Mixed Reality passed certification, and became available for download in the store on Friday the 13th around 8:30pm CET. I was able to download, install and verify it was working as I expected. Four days before the actual official rollout of the FCU including the Mixed Reality portal, I was in the Store. Against all odds, I not only managed to make my app available, but also get it available as a launch title.

Achievement unlocked ;)

What happened before

On June 28th, 2017, I was invited to Unity Unite Europe 2017 by Microsoftie Desiree Lockwood, whom I had met numerous times in the course of becoming an MVP. Not having to fly 9 hours to meet an old friend, but only having to take a short hop on a train, I gladly accepted. On a whim, I decided to bring my HoloLens with Walk the World for HoloLens loaded on it. We had lunch and I demoed the app, showing Mount Rainier about 4 meters high, in a side building. That apparently made quite an impression. Talk moved quickly to the FCU Mixed Reality, and how much work it would be to make my app available for MR as well. In a very uncharacteristic moment of hubris I said "you get me a headset, I will get you this app". I got guidance on how to pitch my app, I followed the instructions, and on July 27th the headset arrived.

Suddenly it was time to make sure I lived up to my big words.

Challenge 1: hardware

My venerable old development PC, dating back to 2011, had a video card that in no way in Hades would be able to drive a headset. I could get a 2nd hand video card and the PC, running the Creators Update AKA RS2, said it was ready to rock. So I happily enabled a dual boot config, added the Fall Creators Update Insiders preview, and then ran into my first snag.

On the Creators Update the Mixed Reality portal is nothing more than a preview. The preview ran nicely on my old PC, but the upcoming production version apparently would not. Maybe I should have read this part of the Mixed Reality development documentation better. Not only did the GPU not cut it by far, the CPU was way too old as well. So with a headset on the way, I was looking at this.

Fortunately, one of my colleagues is an avid gamer. She and her husband took a look at the specs, and built an amazing PC for me in a matter of days. Its specs are:

- CPU: AMD Ryzen 7 1700, 3.0 GHz (3.7 GHz Turbo Boost) socket AM4 processor

- Graphics card: Gigabyte GeForce GTX 1070 G1 Gaming 8GB

- Motherboard: ASUS PRIME B350-PLUS, socket AM4

- Memory: Corsair 16 GB DDR4-3000

- Storage: Crucial MX300 1TB M.2

- Power supply: Seasonic Focus Plus Gold 650W

This is built into a Fractal Design Core 2500 Tower with an extra Fractal Design Silent Series R3 120mm Case fan. My involvement in the actual creation of this monster was supplying maximum physical dimensions and entering payment details. Software is my shtick, not hardware. But I can tell you this device runs Windows Mixed Reality like a charm, and very stable, too. Thanks Alexandra and Miles!

Lesson 1: RTFM, and then wait till the FM is indeed final before making assumptions.

Lesson 2: don’t skimp on hardware especially when you are aiming for development.

Challenge 2: tools in flux

Developing for Windows Mixed Reality in early August 2017 was a bit of a challenge. Five factors were in play:

- The Fall Creators Update Insiders preview

- The Mixed Reality Portal

- Visual Studio 2017.x (a few updates came out during the timeframe)

- Unity 2017 (numerous versions)

- The HoloToolkit (halfway rechristened the Mixed Reality Toolkit) – or actually, the development branch for Mixed Reality.

Only when all these five stars aligned, things would actually work together. Only three of the stars were in Microsoft’s control – Unity is of course made by Unity, and the Mixed Reality Toolkit is an open source project only partially driven by Microsoft. Four of them were very much in flux. A new version comes out for one of these stars, and the whole constellation starts to wobble. Fun things I had to deal with were, amongst others:

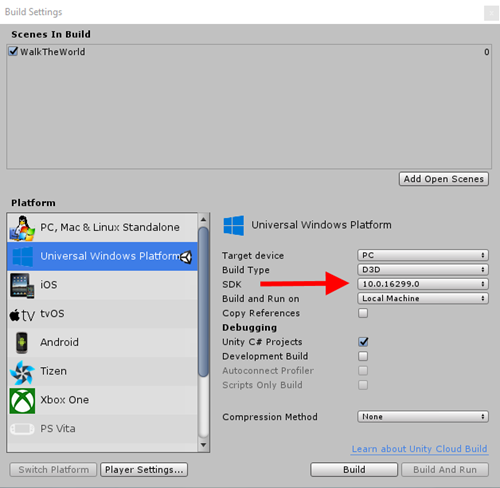

- For quite some time, Unity could not generate Unity C# projects, but only ‘player’ projects, which meant debugging was nearly impossible. But it also made for a fun second-guessing-the-compiler game, kind of like in the very old days before live debugging (yes kids, that’s how long I have been developing software). Effectively, this meant I had to leave the "Unity C# Projects" checkbox unchecked in the build settings, because it created something the compiler did not want - let alone it being deployable.

- An update in Visual Studio 2017 made it impossible to run Unity generated projects unless you manually edited project.lock.json or downgraded Visual Studio (which, in the end, I did).

- Apps ran only once for a while, then you had to reset the MR portal. Or they only showed a black screen. Next time they ran flawlessly. This was caused by a video card driver crash. This was, in a manner of speaking, a 6th star in the constellation that fortunately quickly disappeared.

- For a while, I could not start apps from Visual Studio. I could only start them from the start menu. And only from the desktop start menu. Not from the MR start menu.

- The Mixed Reality version and the HoloLens version of the app got quite far out of sync at one point.

Lesson 3: on the bleeding edge is where you suffer pain. But you also get the biggest gain. And this is where the community’s biggest strengths come to light.

A tale of two tool sets

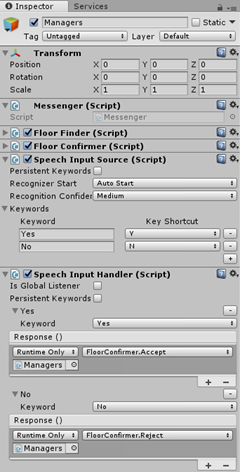

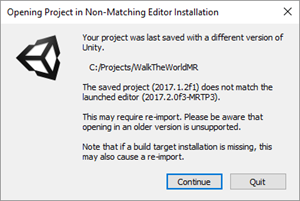

I wanted to move forward with Mixed Reality, but at the same time I wanted to maintain the integrity of the HoloLens version. So although the sources I wrote myself remained virtually the same, at one point the versions of Unity, the Mixed Reality Toolkit and even the Visual Studio versions I needed were different. For now I used for HoloLens development:

- Visual Studio 2017 15.3.5

- Unity 2017.1.1f1

- The Mixed Reality Toolkit master branch.

For Mixed Reality development I used:

- Visual Studio 2017 15.4.0 preview 5

- Unity 2017.2.0f1

- The Mixed Reality Toolkit Dev_Unity_2017.2.0 branch

Why a Visual Studio Preview? Well, that particular preview contained the 16299 SDK (as fellow MVP Oren Novotny pointed out in a tweet), and although I did not know for sure 16299 would indeed be the FCU, I decided to go for it. Late afternoon (CET) of Sunday, October 8, I built the package, pressed it through the WACK, and submitted it. And as I wrote before, it sneaked through shortly after the Store was declared open, becoming an unplanned Mixed Reality release title. Unplanned by Microsoft, that is. It was definitely planned by me. ;)

In the meantime, things are still changing. See this screenshot from the HoloDeveloper Slack group, which I highly recommend joining, especially the immersive_hmd_info and mrtoolkit_holotoolkit channels, as these give a lot of up-to-date information on the five-star-constellation changes and wobbles:

Lesson 4: Keep close track of your tool versions

Lesson 5: Join the HoloDeveloper slack channel (and this means something from a self-proclaimed NOT fan of Slack;) )

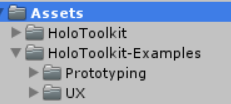

A tale of two source trees

As I already mentioned, I needed to use two versions of the Mixed Reality Toolkit. These are distributed in the form of Unity packages, which means they insert themselves into the source of your app, as source. It’s not like you reference an assembly or a NuGet package. This had a kind of nasty consequence – if I wanted to move forward and keep my HoloLens app intact for the moment, I had to make a separate branch for Mixed Reality development, which was exactly what I did. So although the sources I wrote for my app are virtually the same, there was a different toolkit in my sources. Wow, did Microsoft mess up this one, right?

No. Not at all. Think with me.

- I have a master branch that is based upon the master branch of the Mixed Reality Toolkit – this contains my HoloLens app

- I have an MR branch based upon the Dev_Unity_2017.2.0 branch – this is the Mixed Reality variant. In this branch sits all the intelligence that makes the app work on a HoloLens and an immersive headset.

- At one point the stars will align to a point where I can use one version of everything (most notably, Unity, which keeps on being a wild card in this constellation) to generate an app that will work on all devices. Presumably the Mixed Reality Dev_Unity_2017.2.0 branch will become the master branch. Then I will not merge to my master branch – that will be deleted. My MR branch will be based upon the latest stuff and will become the source of everything.

Changes in code and Unity objects

Preprocessing directives

In the phase when I could not create Unity C# projects - hence debuggable projects - it seemed to me that UWP code within #if UNITY_UWP compiler directives did not get executed in the non-debuggable projects that were the only thing I could generate. Peeking in the HoloToolkit - I beg your pardon - Mixed Reality Toolkit, I saw that all UNITY_UWP compiler directives were gone, and several others were used. I tried WINDOWS_UWP and lo and behold - it worked. Wanting to keep backwards compatibility, I changed all the #if UNITY_UWP directives to #if UNITY_UWP || WINDOWS_UWP. I am not really sure it's still necessary - looking in the Visual Studio solution build configuration now, I see both conditionals defined. I decided to leave it there.
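As a minimal sketch of what such a combined directive looks like in practice (the class and method names here are hypothetical, not taken from Walk the World), the snippet below compiles one of two code paths depending on whether either symbol is defined:

```csharp
// Hypothetical helper: class and method names are illustrative only.
public static class PlatformCheck
{
    // Returns true only when the code was compiled with UNITY_UWP or
    // WINDOWS_UWP defined, i.e. when the UWP-specific branch is active.
    public static bool IsCompiledForUwp()
    {
#if UNITY_UWP || WINDOWS_UWP
        // UWP-only code (for example, calls into Windows.* namespaces) goes here.
        return true;
#else
        // Fallback for the Unity editor and for builds where neither symbol is defined.
        return false;
#endif
    }
}
```

Logging the result of PlatformCheck.IsCompiledForUwp() at startup is one cheap way to see which branch actually ended up in a build you cannot attach a debugger to.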

Camera

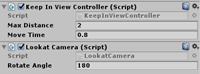

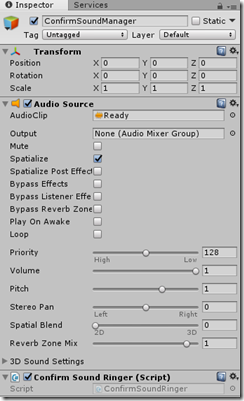

Next to the tried and trusted HoloLensCamera, there's now the MixedRealityCamera. This also includes support for controllers and stuff. What you need to do is to disable (or remove) the HoloLensCamera and add a MixedRealityCameraParent:

This includes the actual camera, the in-app-controller display (just like in the Cliff House, and it looks really cool) as well as a default floor - a kind of bluish square that appears on ground level. I think its apparent size is about 7x7 meters, but I did not check. As Walk the World has its own 'floor' - a 6.5x6.5 meter map - I did not need that, so I disabled that portion.

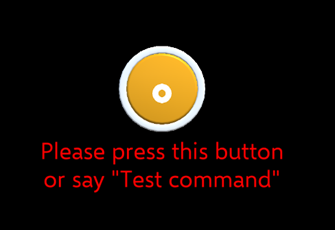

Runtime headset checking - for defining the floor

I am not sure about this one - but when running a HoloLens app, position (0,0,0) is the place where the HoloLens is at the start of the app. That is why my Walk the World for HoloLens starts up with a prompt for you to identify the floor. That way, I can determine your height and decide how far below your head I need to place the map to make it appear on the floor. Simply a matter of sending a Raycast, having it intersect with the Spatial Mapping at least 1 meter below the user's head, and going from there. I will blog about this soon. In fact, I have already started doing so, but then this came around.

First of all, we don't have Spatial Mapping in an immersive headset. But experimenting I found out that (0,0,0) is not the user's head position but apparently the floor directly beneath the headset on startup. This makes life a whole lot easier. I just check

if(Windows.Graphics.Holographic.HolographicDisplay.GetDefault().IsOpaque)

then skip the whole floor finding experience, make the initial map at (0,0,0) and I am done.
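A rough sketch of how that check could be wired into a Unity script follows. Only the IsOpaque call comes from the text above; the FloorSetup class, the PlaceMap and StartFloorFinding methods, the null check and the editor fallback are assumptions made for illustration, and the idea that the origin sits at floor level is my observation from experimenting, not a documented guarantee.

```csharp
using UnityEngine;

// Hypothetical component: class and method names are illustrative only.
public class FloorSetup : MonoBehaviour
{
    private void Start()
    {
        if (IsImmersiveHeadset())
        {
            // Opaque display: the origin appeared to be at floor level, so place the map there.
            PlaceMap(Vector3.zero);
        }
        else
        {
            // See-through display (HoloLens): ask the user to identify the floor first.
            StartFloorFinding();
        }
    }

    private static bool IsImmersiveHeadset()
    {
#if WINDOWS_UWP
        var display = Windows.Graphics.Holographic.HolographicDisplay.GetDefault();
        // Defensive null check (an assumption), in case no holographic display is reported.
        return display != null && display.IsOpaque;
#else
        // The WinRT API is not compiled into the Unity editor; assume HoloLens behaviour there.
        return false;
#endif
    }

    // Stub standing in for the real map placement logic.
    private void PlaceMap(Vector3 position)
    {
        Debug.Log("Placing map at " + position);
    }

    // Stub standing in for the real floor finding experience.
    private void StartFloorFinding()
    {
        Debug.Log("Starting floor finding");
    }
}
```

Wrapping the WinRT call in #if WINDOWS_UWP keeps the script compiling inside the Unity editor, where the Windows.* namespaces are not available.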

Stupid tight loops

In my HoloLens app I got away with calling this on startup.

    private bool CheckAllowSomeUrl()
    {
        var checkLoader = new WWW("http://someurl");
        // Busy-waits on the calling thread until the download completes.
        while(!checkLoader.isDone);
        return checkLoader.text == "true";
    }

This worked, as it was in the class that was used to build the map. In the HoloLens app it was not used until the user had defined the floor, so it had plenty of time to do its thing. Now, this line was called almost immediately after app startup and the whole thing got stuck in a tight loop and I only got a black screen.

In the meantime, I have upgraded the Unity version that builds the HoloLens app from 5.6.x to 2017.1.x and this problem occurs there now as well. Yeah, I know it's stupid. I wrote this quite some time ago, it worked, and I forgot about it. Thou shalt use yield. Try to pinpoint this while you cannot debug.
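For what it's worth, a coroutine-based variant of that check might look something like the sketch below. It is not the code that ended up in the app: the UrlChecker class, the callback parameter and the error handling are assumptions for illustration. It keeps the WWW class from the original snippet, although newer Unity versions favour UnityWebRequest.

```csharp
using System;
using System.Collections;
using UnityEngine;

// Hypothetical component: class name and callback shape are illustrative only.
public class UrlChecker : MonoBehaviour
{
    private void Start()
    {
        // Kick off the check without blocking; the result arrives via the callback.
        StartCoroutine(CheckAllowSomeUrl(allowed => Debug.Log("Allowed: " + allowed)));
    }

    private IEnumerator CheckAllowSomeUrl(Action<bool> onDone)
    {
        var checkLoader = new WWW("http://someurl");

        // Hands control back to Unity; execution resumes here once the download is done.
        yield return checkLoader;

        // Treat any download error as "not allowed" (an assumption for this sketch).
        var allowed = string.IsNullOrEmpty(checkLoader.error) && checkLoader.text == "true";
        onDone(allowed);
    }
}
```

Because a coroutine cannot return a value directly, the result is passed to a callback instead of being returned as a bool, which is the main change callers have to adapt to.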

Skybox

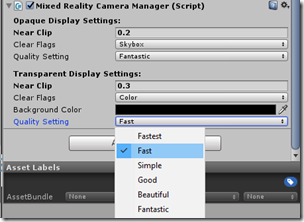

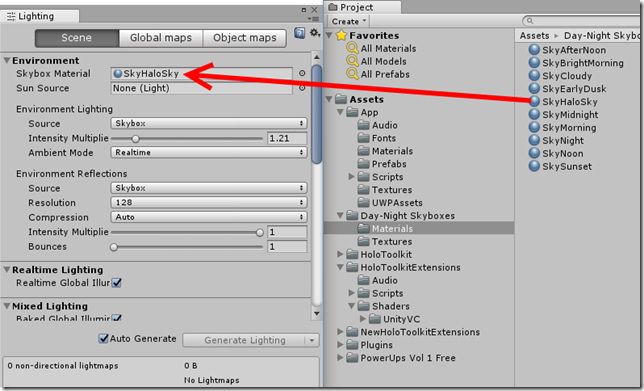

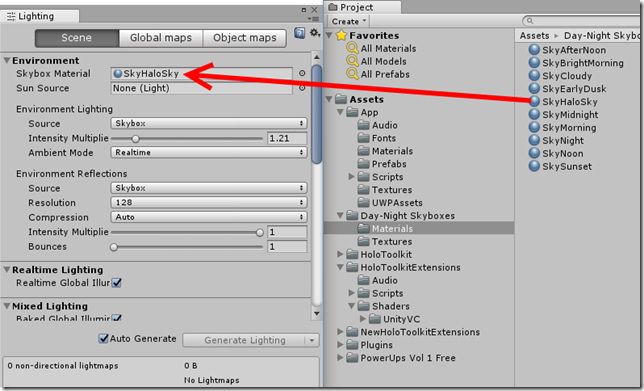

A HoloLens app has a black Skybox, as it does not need to generate a virtual environment - its environment is reality. An immersive headset does not have that, so in order to prevent the user from having the feeling of floating in an empty dark space, you have to provide some context. Now Unity has a default Skybox, but according to a Microsoft friend who helped me out, using the default Skybox is Not A Good Thing and the hallmark of low quality apps. Since I only ever made HoloLens apps, this never occurred to me. With the aid of the HoloDeveloper slack channel I selected this package of Skyboxes and selected the HaloSky, which gives a nice half-overcast sky.

Coming from HoloLens, having never had to bother with Skyboxes before, you can spend quite some time looking for how the hell you are supposed to set one. I assume it's all very logical for Unity buffs, but the fact is that you don't have to look in the Scene or the Camera - the most logical place to look after all - but you have to select Window/Lighting/Settings from the main window. That will give a popup where you can drag the Skybox material in.

You can find this in the documentation, in this page that is titled "How do I Make a Skybox?", but since I did not want to make one, just use one, it took me a while to find it. I find this confusing wording rather typical for Unity documentation. The irony is that the page itself is called "HOWTO-UseSkybox.html".

Upgrading can be fun - but not always

At one point I had to upgrade from Unity 5.6.x to 2017.1.x and later 2017.2.x. I have no idea what exactly happened and how, but at some point some settings I had changed from default in some of my components in the Unity editor got reverted to default. This was fortunately easy to track down with a diff using TortoiseGit. I also noticed my Store Icon got reverted to its default value - no idea why or how, but still.

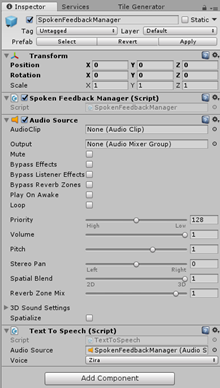

In the course of upgrading, you will also notice some namespaces have changed in Unity. For instance, everything that used to be in UnityEngine.VR.WSA is now in UnityEngine.XR.WSA. Similar things happened in the Mixed Reality Toolkit. For reasons I don't quite understand, a TextToSpeechManager can now only be called from the main thread. For extra fun, in a later release its name changed into TextToSpeech (sans "Manager") and the method name changed a little too.

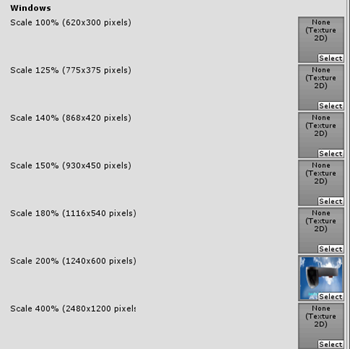

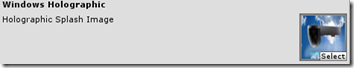

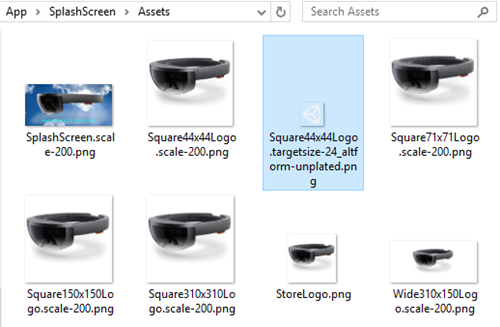

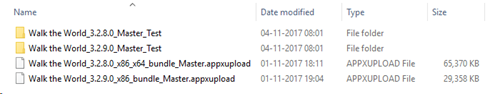

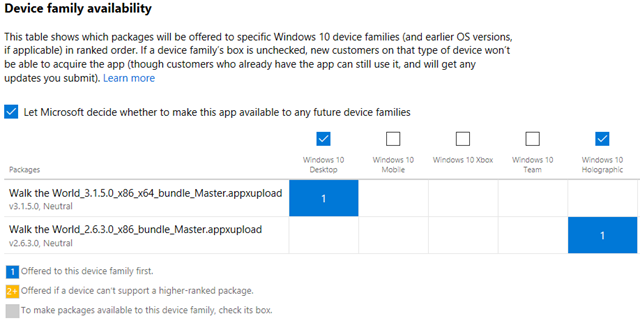

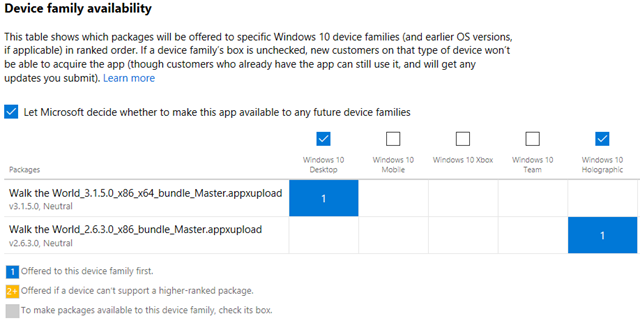

Submitting for multiple device families

Having only submitted either all-device-type supported UWP apps or HoloLens apps that, well, only ran on HoloLens, I was a bit puzzled how to go about making various packages for separate device families. I wanted to have an x86 based package for HoloLens, and an x86/x64 package for Windows Mixed Reality. I actually built those on different machines and I also gave them different version numbers.

But whatever I tried, I could not get this to work. If I checked both the checkbox for Holographic and Windows, the portal said it would offer both packages on both platforms depending on capabilities. I don't know if that would have caused any problems, but I got a tip from my awesome friend Matteo Pagani that I should dig into the Package.appxmanifest manually.

In my original Package.appxmanifest it said:

    <Dependencies>
      <TargetDeviceFamily Name="Windows.Universal" MinVersion="10.0.10240.0"
                          MaxVersionTested="10.0.15063.0" />
    </Dependencies>

For my HoloLens app, I changed that into

    <Dependencies>
      <TargetDeviceFamily Name="Windows.Holographic" MinVersion="10.0.10240.0"
                          MaxVersionTested="10.0.15063.0" />
    </Dependencies>

For my Mixed Reality app, I changed that into

    <Dependencies>
      <TargetDeviceFamily Name="Windows.Desktop" MinVersion="10.0.16299.0"
                          MaxVersionTested="10.0.16299.0" />
    </Dependencies>

And then I got the results I wanted, and I was absolutely sure the right packages were offered to the right (and capable) devices only.

Setting some more submission options

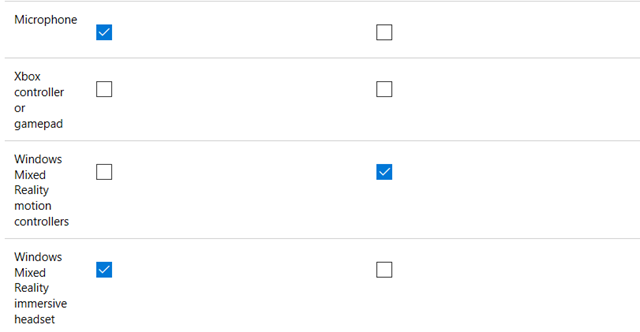

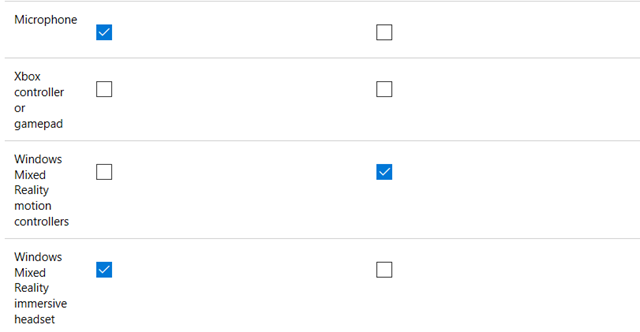

From the same Microsoft friend who alerted me to my Skybox issues I also got some hints on how to submit a proper Mixed Reality headset app. There were a lot of options I was never aware of. Under "Properties", for instance, I set this

as well as this under "System requirements" (left is minimum hardware, right is recommended hardware)

Actually, you should set a lot more settings considering the minimal specs for the PC. Detailed instructions can be found here, including the ones I just discussed ;)

Conclusion

It was a rocky ride but a fun one too. I spent an insane amount of time wrestling with unfinished tools, but seeing my app work on the Mixed Reality headset for the very first time was an adrenaline high I will not forget easily. Even better was the fact I managed to sneak in the back door to get my app in the Store ready for the Fall Creators Update launch - that was a huge victory.

In the end, I did it all myself, but I could not have gotten there without the help of all the people I already mentioned, not to mention some heroes from the Slack Channel, particularly Lance McCarthy and Jesse McCulloch who were always there to get me unstuck.

In hindsight, Mixed Reality development is not harder than HoloLens development. In fact, I'd call it easier because you are not constrained by device limits, deployment and testing go faster, and the Mixed Reality Toolkit evolved to a point where things get insanely easy. Nearly all my woes were caused by my stubborn determination to be there right out of the gate, so I had to use tools that still had severe issues. Now that stuff is fleshed out, there's not nearly as much pain. The fun thing is, when all is said and done, HoloLens apps and Mixed Reality apps are very much the same. Microsoft's vision for a platform for 3D apps is really coming true. You can re-use your HoloLens knowledge for Mixed Reality - and vice versa. Which brings us to:

Lesson 6: if you thought HoloLens development was too expensive for you, get yourself a headset and a PC to go with it. It's insanely fun and a completely new, exciting and nearly empty market is waiting for you!

Enjoy!